Gen AI Buildouts Spur Tech Infrastructure Boom

Key Takeaways

- Ambitious capital spending on generative AI projects by hyperscalers, which we view as a once-in-a-decade cycle, represents a meaningful tailwind for technology infrastructure providers supplying the picks and shovels to run large language models.

- Cloud infrastructure software companies play a critical role in enabling AI workloads by providing specialised computing, data management and workload monitoring services to enterprise customers.

- The processing needs of inference models like the leading chatbots require more specialised chips than traditional enterprise applications, leading to a renaissance in custom silicon development by semiconductor makers.

Hyperscaler Capex Growing Steadily

Since the first public availability of ChatGPT three years ago, generative artificial intelligence (Gen AI) has expanded rapidly across consumer and business markets, among both publicly traded companies and privately held firms. Cloud hyperscalers were among the first providers of Gen AI services and, judging by their capital spending commitments, they should remain the drivers of Gen AI adoption. While use cases, commercial monetisation and potential disintermediation of certain parts of the economy remain at an early stage, large language models (LLMs) are proliferating, and we believe industries providing the picks and shovels to power Gen AI workloads offer compelling investment opportunities today.

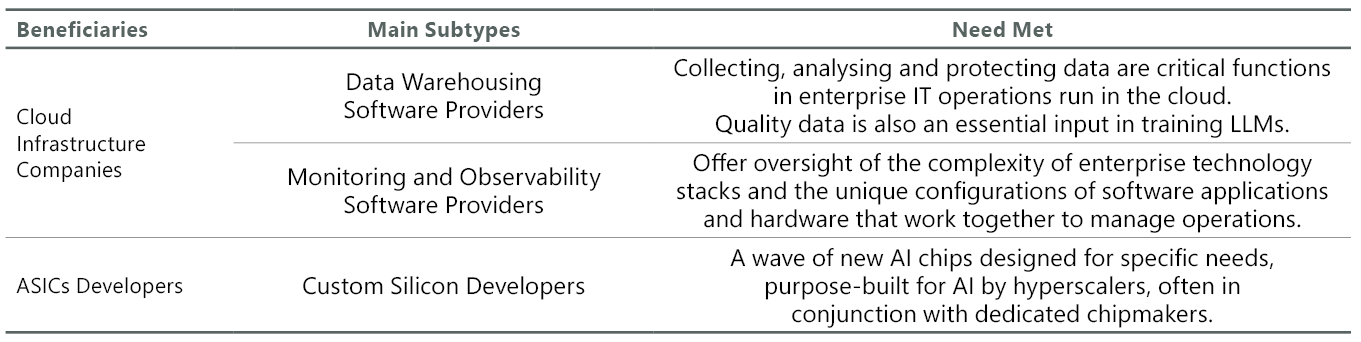

We broadly describe these industries as technology infrastructure providers, as they bring together the data and processing power needed to make LLMs function. More specifically, we see cloud infrastructure software companies and developers of application-specific integrated circuits (ASICs) — custom silicon solutions — as near- to medium-term beneficiaries of ambitious Gen AI capex. Both industries are seeing improving fundamentals and new growth avenues thanks to Gen AI — a trend that appears to be in its early innings.

Exhibit 1: Beneficiaries of Gen AI Capex

Source: ClearBridge Investments.

In 2025, the four major hyperscalers (Microsoft, Amazon, Alphabet and Meta Platforms) are forecast to spend a combined $378 billion, up 65% compared to 2024 and a meaningful increase from forecasts at the start of the year (Exhibit 2), while projections for 2026 indicate steady spending growth. Not included in these totals are multibillion-dollar budgets by rising cloud platforms like Oracle, CoreWeave and privately held names. Such commitments, which we view as a once-in-a-decade capex cycle, represent a meaningful tailwind for technology infrastructure providers. And the fact that the capex is coming from these mega cap companies’ own cash flows rather than through new debt issuance gives us confidence in Gen AI as a secular evolution in computing.
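As a rough check on the scale involved, the 2024 base implied by these two figures can be backed out with simple arithmetic. The sketch below uses only the $378 billion forecast and the 65% growth rate quoted above; the function and variable names are illustrative, and the result is an approximation rather than a reported figure.

```python
# Figures quoted in the text: combined 2025 capex forecast and its growth over 2024.
CAPEX_2025_BN = 378.0   # USD billions
GROWTH_VS_2024 = 0.65   # 65% increase compared to 2024


def implied_prior_year(current: float, growth: float) -> float:
    """Back out the prior-year level from the current level and its growth rate."""
    return current / (1.0 + growth)


# Implied 2024 base and the absolute year-over-year increase (both approximate).
capex_2024_bn = implied_prior_year(CAPEX_2025_BN, GROWTH_VS_2024)
increase_bn = CAPEX_2025_BN - capex_2024_bn

print(f"Implied 2024 capex: ~${capex_2024_bn:.0f}bn")
print(f"Implied year-over-year increase: ~${increase_bn:.0f}bn")
```

On these inputs, the implied 2024 base is roughly $229 billion, so the forecast represents about $149 billion of incremental spending in a single year.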

Exhibit 2: Hyperscaler Capex Keeps Increasing

As of 31 August 2025. Source: Company reports and statements; ClearBridge Investments.

Infrastructure Software Recovering

Cloud infrastructure companies play a critical role in enabling AI workloads by providing the foundational computing, storage and networking resources needed to train, deploy and scale LLMs. Many enterprises initially attempted to build AI capabilities in-house with ad-hoc tools, but the complexity and high failure rates of these DIY efforts have driven a shift back toward proven platforms from specialised vendors. The expertise and ecosystems developed by cloud infrastructure firms for running AI at scale have become preferable to “home grown” solutions, especially as companies realise that reliable performance and security are paramount for AI projects.

The evolving business model at Oracle illustrates the high demand for AI infrastructure that can effectively run training models. The company’s Oracle Cloud Infrastructure has emerged as a viable fourth hyperscaler, offering server configurations designed to scale quickly, advanced networking capabilities and cost savings compared to competitors. Oracle is a key partner in the U.S. government’s Stargate AI initiative, has recently won several large commercial contracts with OpenAI and xAI, and works with Meta to train its Llama models. The company should also see tailwinds from its abundant supply of sought-after Nvidia graphics processing units (GPUs), key components in its newest AI supercluster configurations. These GPUs are linked together with extremely fast interconnects, enabling the kind of large-scale distributed training that models require. Oracle’s transformation highlights how the cloud market is expanding to new players building cloud infrastructure finely tuned for the unique needs of AI.

Rapid AI adoption is turbocharging digital transformation as companies modernise IT systems and migrate more workloads from on-premises data centres to the more AI-applicable cloud. This wave of cloud migration creates knock-on demand for infrastructure software and services that can manage complex, distributed applications. The result is a rising tide for both the major hyperscale clouds (Microsoft Azure, Amazon Web Services, Google Cloud) and a cadre of “vertical” best-of-breed infrastructure software players that focus on specific needs. Many enterprises now use a combination of both, leveraging hyperscalers for base cloud services and supplementing them with independent software vendors for specialised functions like data analytics or monitoring. In fact, the average large business today uses hundreds of different software applications to get work done, highlighting the complexity of modern tech stacks.

Many infrastructure software makers feature consumption-based business models as opposed to subscription models offered by most application software vendors. Consumption models enable customers to only pay for what they use, providing flexibility and enabling prioritisation in IT budgets. After a soft period in 2024 when many enterprises were optimising costs and digesting their cloud spend, consumption-driven providers are now seeing re-accelerating growth in their quarterly results, boosted by strong reception to new AI product offerings and increased usage.
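The difference between the two billing models can be sketched in a few lines. Everything in the example below is hypothetical and chosen purely for illustration: the flat fee, the per-unit price and the monthly usage figures do not come from any vendor. The point is only that a consumption vendor's revenue moves with customer usage, while a subscription vendor's revenue does not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical price list; the numbers used below are illustrative only."""
    flat_monthly_fee: float  # subscription: fixed fee regardless of usage
    price_per_unit: float    # consumption: fee per unit of usage


def subscription_bill(pricing: Pricing, usage_units: float) -> float:
    """A subscription customer pays the same amount whatever the usage."""
    return pricing.flat_monthly_fee


def consumption_bill(pricing: Pricing, usage_units: float) -> float:
    """A consumption customer pays in proportion to usage."""
    return pricing.price_per_unit * usage_units


pricing = Pricing(flat_monthly_fee=10_000.0, price_per_unit=2.0)

# Hypothetical usage path: a cost-optimisation month, a normal month,
# then a month with new AI workloads added.
monthly_usage = [3_000, 5_000, 8_000]

for units in monthly_usage:
    sub = subscription_bill(pricing, units)
    con = consumption_bill(pricing, units)
    print(f"usage={units:>5}  subscription=${sub:,.0f}  consumption=${con:,.0f}")
```

Under these assumed numbers the consumption bill falls below the flat fee when the customer cuts usage and rises above it as usage grows, which is why optimisation periods and new workloads both show up directly in a consumption vendor's quarterly results.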

Infrastructure Software: Data Warehousing

Collecting, analysing and protecting data are critical functions in enterprise IT operations run in the cloud. Quality data is also an essential input in training LLMs. Data warehousing software makers fill this need with cloud-based architectures that enable customers to organise their data for advanced analytics and Gen AI use. Snowflake, for instance, enables enterprises to unify siloed data and run large-scale analytics or training workloads efficiently across multiple cloud environments. The company has reported greater uptake of these and newer AI and machine learning offerings among its larger customers as incremental drivers of its greater than 30% year-over-year revenue growth. Privately held Databricks is also a critical piece of this ecosystem, furthering advanced AI and industry standards around data lakes and data storage. The company’s forays into supporting PostgreSQL relational database environments (via internal development and, most recently, acquisition) significantly expand its purview and future total addressable market (TAM) and should also drive consumption.

Infrastructure Software: Monitoring and Observability

Where a corporate IT department may have managed a handful of third-party vendors in the past, today the number of specialised platforms and applications can run into the hundreds. The complexity of enterprise technology stacks and the unique configurations of software applications and hardware that work together to manage operations underscore the need for comprehensive oversight of all these functions. Monitoring and observability software providers offer that oversight, helping customers monitor and analyse IT performance as well as identify issues and threats. Observability is an underpenetrated market, and we see continued growth as organisations increasingly reliant on digital infrastructure expand the number of applications monitored. We believe LLM observability, a rapidly growing market due to the acceleration of Gen AI workloads, creates a new vector for growth for these stocks not reflected in fundamental estimates. While competition is high due to the presence of lower-priced, open-source data monitoring vendors and some enterprise customers insourcing some observability functions, we believe companies with the end-to-end platforms to consolidate multiple observability vendors, like Datadog and Dynatrace, can sustain high teens to low 20s percent revenue growth for multiple years.

Exhibit 3: Monitoring to Expand Across More Applications

As of 31 December 2024. Source: KeyBanc Capital Markets, June and December 2024 CIO surveys.

In our view, larger software companies that host customers on their own cloud infrastructure are also well positioned in the near to intermediate term to benefit from an ongoing capex cycle. Capex at Microsoft, for example, is expected to shift from recent spending on land and buildings for data centres back toward revenue-driving activities such as chip purchases, which should drive consumption and enable the company to recognise in its results some of the very large Gen AI bookings it has generated to date.

Custom Silicon to Power Next Wave of AI Buildouts

The unprecedented compute demands of Gen AI have not only supercharged cloud capex, they have also upended the semiconductor landscape. Serving millions of intelligent queries through inference models like ChatGPT and Google Gemini requires far more specialised chips than traditional enterprise applications. This has led to a renaissance in custom silicon development, marked by the design of “XPUs,” where X can stand for any accelerated processor (GPU, CPU, etc.) tailored to AI. While Nvidia’s GPUs and its complete ecosystem of chips and software have allowed the company to maintain a large market share lead, other semiconductor makers also play critical roles in developing custom silicon as a complement or alternative to Nvidia. The result is a wave of new AI chips designed for specific needs: some target the training of giant models, but the majority are optimised for inference models already in use, and many integrate novel approaches to boost performance or efficiency.

Exhibit 4: Custom Silicon a Small but Rapidly Growing Part of AI Chip Market

*Merchant consists of Nvidia and Advanced Micro Devices processors. **Custom consists of Broadcom and Marvell Technology silicon.

As of 26 September 2025. Sources: Visible Alpha (Street Estimates), ClearBridge Investments.

Exhibit 5: Custom Silicon Sales Just Starting to Ramp

*Merchant consists of Nvidia and Advanced Micro Devices processors. **Custom consists of Broadcom and Marvell Technology silicon.

As of 26 September 2025. Sources: Visible Alpha (Street Estimates), ClearBridge Investments.

These ASICs are purpose-built by the hyperscalers themselves, often in conjunction with dedicated chipmakers. Broadcom, for example, is developing ASICs for the high performance needs of customers that include Google, Meta and OpenAI. This strategy of partnering rather than competing with the hyperscalers has proved fruitful: Broadcom is capturing an increasing share of AI semiconductor spend as those giant customers ramp up their data centres. Broadcom’s AI chip revenues are expected to grow sharply as these projects scale, validating its approach of selling to all the major AI players rather than betting on one end-product.

While Broadcom is the clear market share leader in custom silicon, we believe the TAM is large and growing rapidly enough to support multiple players. Arm Holdings makes custom CPUs that go into some of Nvidia’s GPU configurations and has benefited significantly from this relationship. Marvell Technology also designs chips primarily for inference models, having progressed significantly in its intellectual property and processing capabilities and counting Amazon and Microsoft among its core customers. In addition to chip design, Marvell’s lineup of network connectivity solutions (like high-speed Ethernet adapters and switches) makes it a sought-after partner to build the plumbing for AI supercomputers. The company’s networking products, such as high-bandwidth switches and optical interconnects that move data between thousands of processors in parallel, are crucial in stitching together AI clusters.

As AI models become larger and more distributed, we believe networking is becoming as important as processing speed, and Marvell’s solutions address that critical bottleneck. As with Broadcom, we view Marvell as well suited to participate as hyperscalers diversify their semiconductor spending away from just buying off-the-shelf GPUs. Importantly, these custom silicon developers are targeting an inference market that now represents the bulk of net new AI spending and is growing at a much faster pace than the training market.

Custom silicon developers also make networking chips, switches and interconnect devices to meet the higher-bandwidth data movement and massive storage requirements of AI workloads. These companies partner with a number of supporting players in the semiconductor capital equipment, electronic design automation and contract manufacturing areas that supply the high-performance silicon wafers and design tools to meet the increased chip complexity in the Gen AI era.

Conclusion

The development and adoption of Gen AI is a secular growth trend still in its early innings. It remains to be seen which parts of the technology universe will ultimately benefit or be challenged by Gen AI, but we believe consistent capex growth by hyperscalers creates a positive demand environment for both infrastructure software and custom silicon developers. Earnings and free cash flow growth rates for mega cap hyperscalers remain healthy, which should continue to support the growth outlook for more vertically focused companies supporting Gen AI buildouts.
